In a recent paper, I seem to have misled anyone who might try to replicate my work. The paper is here, and the full reference is:

“Long-Range Prosody Prediction and Rhythm”, Greg Kochanski, Anastassia Loukina, Elinor Keane, Chilin Shih and Burton Rosner, University of Oxford, Speech Prosody 2010 100222:1-4. (Note that “100222” is the volume number.)

The problem is in the sentence “…where D is the running duration measure from [3][9]” in Section 2.2, point 2. Reference [3] is to a 2005 paper of mine that describes an algorithm for computing running duration: G. Kochanski, E. Grabe, J. Coleman, and B. Rosner, “Loudness predicts prominence: Fundamental frequency lends little,” J. Acoustical Society of America, vol. 118, no. 2, pp. 1038–1054, 2005 (available here). Unfortunately, that is not the same algorithm. In the four years since then, I had changed the algorithm that I routinely use.

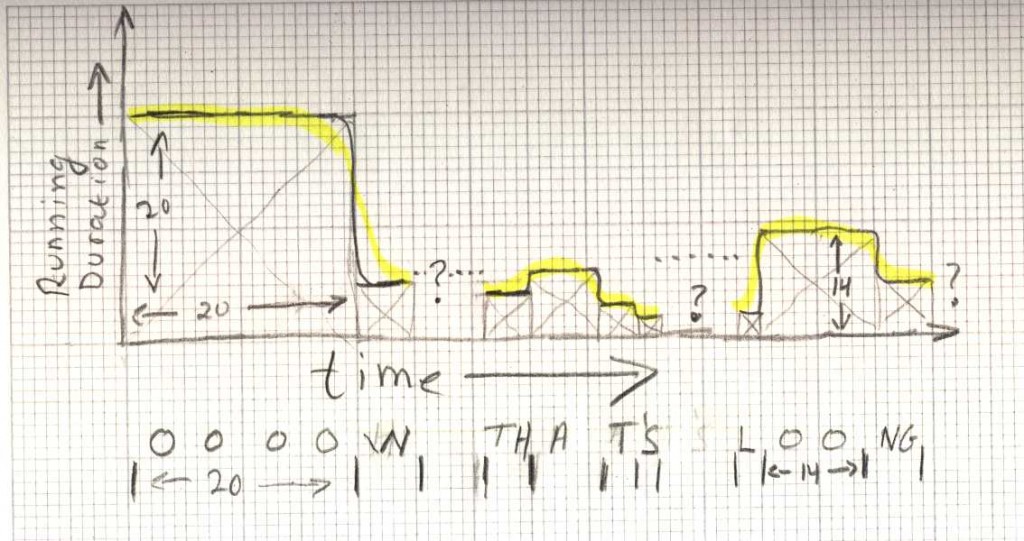

The idea behind the algorithm is that it continuously measures how long each sound is stable. If you make an “oooo” sound that lasts 2.0 seconds, the algorithm should give a value near 2.0 at any moment within that sound. Then, if you follow it with a shorter “w” sound, the value the algorithm produces should be small inside the “w”.
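To make the idea of a continuously measured duration concrete, here is a toy sketch in Python with NumPy. It is not the pseudoduration script. The function name `toy_stability_duration`, the per-frame feature vectors, the fixed frame step and the distance tolerance `tol` are all hypothetical choices made for illustration. For each frame, it measures how far backwards and forwards the signal stays within `tol` of that frame, and reports the length of that span in seconds.

```python
import numpy as np

def toy_stability_duration(features, frame_step, tol):
    """Toy running-duration measure (hypothetical; NOT the pseudoduration script).

    features:   array of shape (n_frames, n_dims), one feature vector per frame.
    frame_step: time between successive frames, in seconds.
    tol:        maximum Euclidean distance for a frame to count as "the same sound".
    Returns an array with one duration (in seconds) per frame.
    """
    features = np.asarray(features, dtype=float)
    n = len(features)
    out = np.empty(n)
    for i in range(n):
        # Walk backwards while earlier frames stay close to frame i.
        lo = i
        while lo > 0 and np.linalg.norm(features[lo - 1] - features[i]) <= tol:
            lo -= 1
        # Walk forwards while later frames stay close to frame i.
        hi = i
        while hi < n - 1 and np.linalg.norm(features[hi + 1] - features[i]) <= tol:
            hi += 1
        # Duration of the stable stretch that contains frame i.
        out[i] = (hi - lo + 1) * frame_step
    return out
```

With 10 ms frames, 200 identical frames (a steady 2.0 s “oooo”) followed by 5 different frames (a short “w”) give a value of about 2.0 anywhere inside the vowel and about 0.05 inside the “w”:

```python
feats = np.concatenate([np.full((200, 1), 1.0), np.full((5, 1), 5.0)])
d = toy_stability_duration(feats, frame_step=0.01, tol=0.5)
print(d[100], d[202])
```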

For those of you who like to think in terms of phonemes, you can approximate it as a bit of pseudocode:

```
for all times t in the data file {
    find out which phoneme you are in at time t
    the returned value at time t is the duration of that phoneme
}
```

(I emphasize that the above pseudocode is wrong in every detail. It’s just intended to help get your brain around the idea of a continuously changing measurement of duration. The real algorithm can be found here by going to the “pseudoduration” script.)
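For anyone who wants to play with the phoneme-level approximation, here is a runnable Python version of the pseudocode. It inherits all the pseudocode’s flaws and is not the real algorithm. The segment format, a sorted list of non-overlapping `(start, end, label)` tuples in seconds such as a forced aligner might produce, and the function names are hypothetical.

```python
from bisect import bisect_right

def phoneme_duration_at(segments, t):
    """Duration of the labelled segment that contains time t.

    segments: list of (start, end, label) tuples in seconds, sorted by start
              and non-overlapping (hypothetical format).
    Returns 0.0 if t falls outside every segment.
    """
    starts = [start for start, _, _ in segments]
    # Index of the last segment that starts at or before t.
    i = bisect_right(starts, t) - 1
    if i >= 0:
        start, end, _ = segments[i]
        if start <= t < end:
            return end - start
    return 0.0

def phoneme_duration_track(segments, times):
    """The loop from the pseudocode: one duration value per sample time."""
    return [phoneme_duration_at(segments, t) for t in times]

segs = [(0.0, 2.0, "oo"), (2.0, 2.125, "w")]
print(phoneme_duration_track(segs, [0.5, 1.9, 2.05, 3.0]))  # [2.0, 2.0, 0.125, 0.0]
```

The value jumps abruptly at every phoneme boundary, which is one reason a continuous, signal-based measure is preferable.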

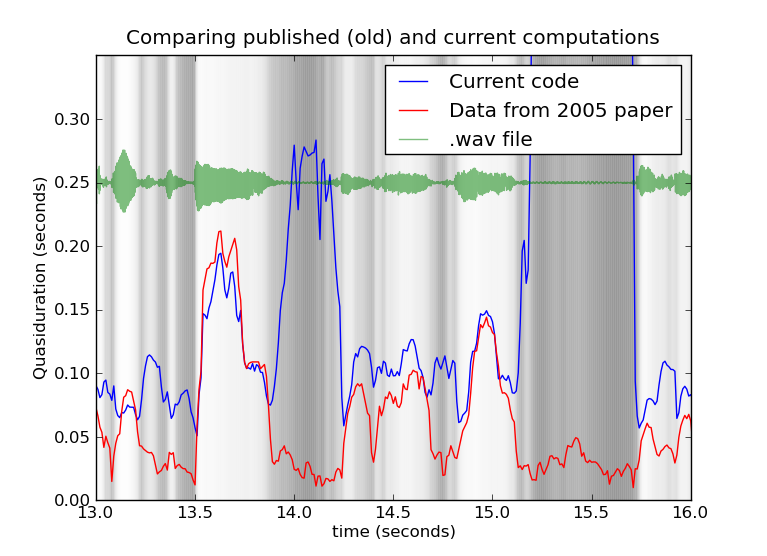

Here’s a comparison between the old algorithm (red) and the new one (blue). The two algorithms match pretty well in the loud regions but differ drastically in the silences. The silences are the vertical dark regions, as you can see from the green speech waveform; the darkness shows the loudness at each point. The plot is computed from the l-reg3-f1 audio file in the IViE corpus, the same audio file used in Figure 1 of the 2005 paper.

The new algorithm gives a more sensible value in silences: its value is approximately equal to the length of the silence, whereas the old version just gave a small value near zero. The new algorithm is also written to be closer to the way the ear processes sound: it uses frequency bands that match the ear’s, and it incorporates the knowledge that perceived loudness is approximately the cube root of the acoustic power in each band. So, I think it’s an improvement.
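As a rough illustration of the cube-root rule, here is a minimal sketch in Python with NumPy. It assumes the signal has already been split into ear-like frequency bands and that `band_power` holds the acoustic power for each frame and band. The names `band_loudness` and `total_loudness` are hypothetical, and summing the per-band values into a single number is an assumption made here for illustration, not necessarily what the pseudoduration script does.

```python
import numpy as np

def band_loudness(band_power):
    """Approximate perceived loudness per band: loudness ~ power ** (1/3).

    band_power: array of shape (n_frames, n_bands) of non-negative power values
                (hypothetical input; band splitting is not shown here).
    """
    return np.cbrt(np.asarray(band_power, dtype=float))

def total_loudness(band_power):
    """Hypothetical per-frame total: sum of the per-band cube-root loudnesses."""
    return band_loudness(band_power).sum(axis=-1)

print(total_loudness([[8.0, 27.0], [0.0, 1.0]]))
```

Because of the cube root, multiplying the power in a band by 8 only doubles its loudness, which compresses the enormous dynamic range of acoustic power into something closer to what a listener perceives.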

But, certainly, it’s not the same algorithm, so citing reference [3] was not correct. A better sentence would have been “…where D is the running duration measure from [9], which evolved from the algorithm presented in [3]”.

How did it happen? Primarily, I was in a rush. The scientific world is very much a case of “publish or perish”, and the deadline for a good conference was approaching. It was very easy just to grab the reference where I had first described the idea, and not ask myself if it had changed since then.

Partly, I was thinking about saving space. These conference papers are strictly limited to four pages, which is not a lot to describe an experiment that involves complicated software. Add eight words here, and you have to remove eight words somewhere else. So one trims the descriptions to the bone, ideally leaving just enough for another expert to understand. This time, it was trimmed a bit too much.

Loose ends:

- I haven’t checked the algorithm in reference [9] to make sure it’s identical to the one described here. That will involve excavating an old version of my code. That’s possible: everything is kept in a Subversion repository (except the really old material, which is in CVS), so I have all the old versions. Subversion tells me what code was being used on what day (almost). The “almost” arises because Subversion keeps track of the code that you have committed to a repository, not the code that was actually running the computation, so some ambiguity will remain.

- Mark Liberman tells me that the value of “C” in the 2005 published description is wrong. That seems to be true, but I haven’t yet sorted out what the correct value is. I have done some excavations in CVS, and the description of the old algorithm seems correct, but the “C” value doesn’t match. My suspicion is that “C” came from some intermediate version of the code that I was working on afterwards, while I was writing the paper. However, the figures in the 2005 paper (e.g. Figure 1) were certainly computed with the same algorithm that was used to produce the results. So, I believe the 2005 paper is entirely consistent, except that “C” is wrong. I don’t think this problem affects any of the conclusions; it just makes it harder for someone to reproduce them.